Brussels – A month and a half after the historic agreement on the world’s first law to regulate the uses of artificial intelligence, the new Office for Artificial Intelligence is born within the European Commission. It will be tasked with “ensuring the development and coordination of policy on AI at the European level” and “overseeing the implementation and enforcement” of the law, which still has to be ratified by the co-legislators of the EU Parliament and Council. The decision is part of the broader innovation package to support start-ups and small and medium-sized enterprises approved today (Jan. 24) by the College of Commissioners.

The decision, effective as of today although operations will only begin in the coming months, follows up on the political agreement on the AI Act reached on December 8, 2023. As the EU executive explains in a note, the ad hoc Office will become “a central coordinating body” for specific policies at the EU level, through cooperation with other Commission departments, EU bodies, member states and all stakeholders. The Office will have “an international vocation,” promoting the Union’s approach to AI governance at the global level and, more generally, developing “knowledge and understanding” of new technologies and their “adoption and innovation.”

In addition to the in-house AI Office at the Berlaymont, the EU executive also planned a series of measures to give start-ups and SMEs privileged access to supercomputers for training “reliable” models, with the goal of “creating AI factories.” The focus will be on acquiring, upgrading, and operating supercomputers “to enable rapid machine learning and training of large-scale general-purpose AI models.” The plans also include a one-stop shop for algorithm development and for the testing, evaluation, and validation of large-scale AI models, as well as the development of “emerging applications of general-purpose model-based AI.” All this will be made possible by an amendment to the EuroHPC regulation, the one establishing the European High-Performance Computing Joint Undertaking, which will define the “new pillar for activities” of EuroHPC supercomputers, including Leonardo at the Bologna Technopole. Brussels will also push on the GenAI4EU initiative (to support applications in robotics, health, biotechnology, climate, and virtual worlds), the diffusion of common European data spaces, and two European digital infrastructure consortia: the Alliance for Language Technologies and CitiVerse (to simulate and optimize city processes, from traffic to waste management).

The EU Act on Artificial Intelligence

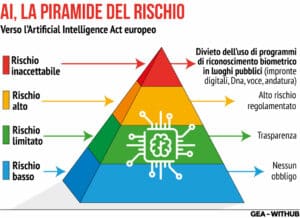

After a 36-hour marathon between December 6 and 8, 2023, the co-legislators of the EU Parliament and Council finalized a compromise agreement on the EU Artificial Intelligence Act. The understanding maintained a horizontal level of protection, with a four-level risk scale for regulating artificial intelligence applications: minimal, limited, high, and unacceptable. Systems with limited risk will be subject to very light transparency requirements, such as disclosing that content was generated by AI. Banned outright, as posing an “unacceptable level” of risk, are cognitive behaviour manipulation systems, untargeted collection of facial images from the Internet or CCTV footage to create facial recognition databases, emotion recognition in the workplace and educational institutions, “social scoring” by governments, and biometric categorization to infer sensitive data (political, religious, or philosophical beliefs, sexual orientation).

For high-risk systems, there is a pre-market fundamental rights impact assessment, along with a requirement to register in the appropriate EU database and requirements on the data and technical documentation to be submitted to demonstrate product compliance. One of the most substantial exceptions is the emergency procedure that will allow law enforcement agencies to use tools that have not passed the evaluation procedure; this procedure will have to work alongside the specific mechanism for the protection of fundamental rights. Exemptions were also granted for the use of real-time remote biometric identification systems in publicly accessible spaces, “subject to judicial authorization and for strictly defined lists of offences.” “Post-remote” use is reserved exclusively for the targeted search of a person convicted or suspected of committing a serious crime, while real-time use, “limited in time and location,” is allowed for targeted searches of victims (kidnapping, trafficking, sexual exploitation), prevention of a “specific and current” terrorist threat, and locating or identifying a person suspected of committing specific crimes (terrorism, human trafficking, sexual exploitation, murder, kidnapping, rape, armed robbery, participation in a criminal organization, environmental crimes).

Image created by an artificial intelligence following the instructions “robot making a speech at the EU Parliament.”

New provisions were added to the text of the agreement to account for situations where artificial intelligence systems may be used for many different purposes (general-purpose AI) and where general-purpose technology is subsequently integrated into another high-risk system. To account for the wide range of tasks that artificial intelligence systems can perform (generation of video, text, images, lateral language conversation, computation, or computer code generation) and the rapid expansion of their capabilities, it was agreed that “high-impact” foundation models (a type of generative artificial intelligence trained on a broad spectrum of generalized, label-free data) will have to comply with several transparency requirements before they are released to the market. These range from drafting technical documentation to complying with EU copyright law and disclosing detailed summaries of the content used for training.

Any natural or legal person will be able to file a complaint with the relevant market surveillance authority regarding non-compliance with the EU Artificial Intelligence Act. In the event of a violation of the Regulation, the company will have to pay either a percentage of its annual global turnover in the previous financial year or a predetermined amount, whichever is higher: 35 million euros or 7 per cent for violations involving prohibited applications, 15 million euros or 3 per cent for violations of the law’s obligations, and 7.5 million euros or 1.5 per cent for providing incorrect information. More proportionate ceilings will apply instead for small and medium-sized enterprises and start-ups.
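
To make the “whichever is higher” rule concrete, the arithmetic can be sketched in a few lines of Python. This is only an illustration of the figures reported above, not a rendering of the legal text: the category labels and the function name `fine_ceiling` are hypothetical, and the more proportionate ceilings for SMEs and start-ups are not modelled because the article gives no figures for them.

```python
from decimal import Decimal

# Figures as reported in the article: (fixed amount in euros, share of turnover).
# The category labels are hypothetical names chosen for this sketch.
FINE_CEILINGS = {
    "prohibited_application": (Decimal("35000000"), Decimal("0.07")),
    "obligation_violation": (Decimal("15000000"), Decimal("0.03")),
    "incorrect_information": (Decimal("7500000"), Decimal("0.015")),
}


def fine_ceiling(category: str, annual_global_turnover: Decimal) -> Decimal:
    """Return the higher of the fixed amount and the turnover-based amount.

    Assumption: turnover is the annual global turnover of the previous
    financial year, in euros. SME and start-up ceilings are not covered.
    """
    fixed_amount, turnover_share = FINE_CEILINGS[category]
    return max(fixed_amount, turnover_share * annual_global_turnover)


if __name__ == "__main__":
    # A company with 1 billion euros in turnover: 7 per cent exceeds 35 million.
    print(fine_ceiling("prohibited_application", Decimal("1000000000")))
    # A company with 100 million euros in turnover: the fixed amount applies.
    print(fine_ceiling("prohibited_application", Decimal("100000000")))
```

Under these assumptions, the percentage only becomes the binding figure for prohibited applications once turnover exceeds 500 million euros (35 million divided by 0.07); below that threshold the fixed amount applies.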

English version by the Translation Service of Withub